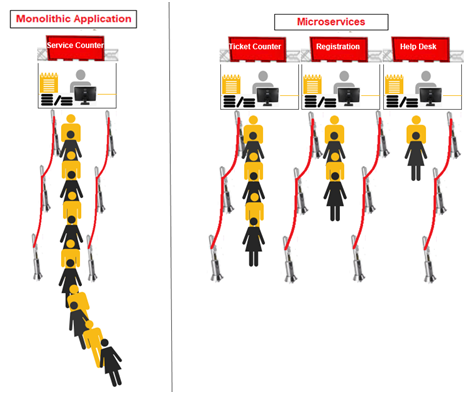

Many organisations are moving away from monolithic systems and adopting microservice architecture to build scalable, resilient applications. Unlike a monolith, a microservice architecture lets teams develop, deploy, and scale services independently. As adoption grows, service meshes and Kubernetes play a critical role in managing communication, security, and observability across distributed services.

This blog post explains how microservice architecture works, why organisations need a service mesh at scale, and how Anypoint Service Mesh extends governance across both MuleSoft and non-MuleSoft services.

What is a Microservice?

A microservice is the basic unit of a microservice architecture: a fully functional, independently deployable service responsible for a single business capability. Each microservice owns its data model and communicates with other services through well-defined APIs.

Because microservices are loosely coupled, other services and applications can consume them through these APIs without creating tight dependencies across the entire system.

Advantages of Using Microservices

- Within a microservice architecture, different teams develop, test, and deploy each service independently, and each team can choose the technology stack that best suits its service.

- Microservices support high availability because each small, single-responsibility service can be replicated and restarted independently.

- Teams scale each service independently based on real-time demand.

- Microservices improve fault tolerance because the failure of one service need not bring down the others, provided callers handle that failure gracefully.
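On Kubernetes, independent scaling usually means giving each service its own HorizontalPodAutoscaler, which computes a replica count from that service's own metric using the documented formula desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that calculation; the service names, CPU figures, and min/max bounds are hypothetical, and the real controller also applies stabilisation windows and other safeguards omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count following the Kubernetes HPA formula
    ceil(current_replicas * current_metric / target_metric), clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical services, each scaled on its own average CPU utilisation (percent).
services = {
    "orders": {"replicas": 3, "cpu": 90.0, "target": 60.0},
    "catalog": {"replicas": 4, "cpu": 20.0, "target": 60.0},
    "payments": {"replicas": 2, "cpu": 63.0, "target": 60.0},
}

for name, s in services.items():
    print(f"{name}: {s['replicas']} -> {desired_replicas(s['replicas'], s['cpu'], s['target'])}")
```

With these inputs, orders scales up from 3 to 5 replicas, catalog scales down from 4 to 2, and payments stays at 2 because it is within tolerance. Each decision depends only on that service's own metric, which is what makes the scaling independent.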

Disadvantages of Using Microservices

Despite the operational benefits of microservice architecture, organisations must address several complexity and management challenges:

- Increased complexity when managing multiple independently deployed services

- Operational overhead related to monitoring, logging, and service coordination

- Greater networking and communication requirements between services

What is a Service Mesh?

To address the operational limitations of microservice architecture, the concept of a service mesh architecture emerged.

A service mesh acts as a dedicated infrastructure layer between services and the underlying network. It handles every request between services and manages traffic routing, security, and observability.

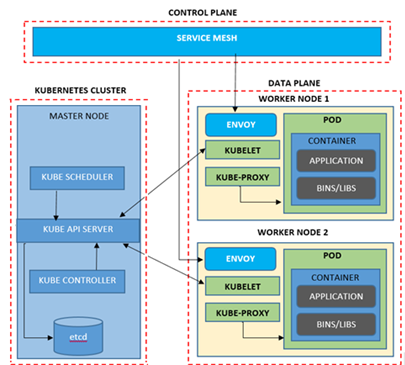

In a Kubernetes environment, a service mesh is divided into a control plane and a data plane. The data plane consists of sidecar proxies, commonly Envoy, which run alongside each service and carry all of its traffic. The control plane configures these proxies, which in turn handle service discovery, load balancing, traffic routing, and security policies.
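The split between the two planes can be illustrated with a toy model in Python. This is not Envoy's or Istio's API: ControlPlane, SidecarProxy, and the endpoint addresses are hypothetical, and real proxies receive configuration updates from the control plane (in Envoy's case over its xDS APIs) rather than reading a shared dictionary. The sketch only shows service discovery followed by round-robin load balancing.

```python
from dataclasses import dataclass, field


@dataclass
class ControlPlane:
    """Hypothetical control plane holding the service registry it pushes to proxies."""
    registry: dict[str, list[str]] = field(default_factory=dict)

    def register(self, service: str, endpoint: str) -> None:
        self.registry.setdefault(service, []).append(endpoint)

    def endpoints(self, service: str) -> list[str]:
        return list(self.registry.get(service, []))


class SidecarProxy:
    """Hypothetical data-plane proxy: resolves a service name, then load-balances round-robin."""

    def __init__(self, control_plane: ControlPlane) -> None:
        self.control_plane = control_plane
        self._counters: dict[str, int] = {}

    def route(self, service: str) -> str:
        # Service discovery: read the latest endpoint list from the control plane.
        endpoints = self.control_plane.endpoints(service)
        if not endpoints:
            raise LookupError(f"no endpoints registered for {service!r}")
        # Round-robin load balancing: rotate through the endpoints on each call.
        i = self._counters.get(service, 0)
        self._counters[service] = i + 1
        return endpoints[i % len(endpoints)]


cp = ControlPlane()
cp.register("orders", "10.0.0.1:8080")
cp.register("orders", "10.0.0.2:8080")
proxy = SidecarProxy(cp)
print([proxy.route("orders") for _ in range(3)])
# ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.1:8080']
```

Because the application calls the proxy by service name only, endpoints can be added or removed in the control plane without changing application code.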

Although a service mesh improves service communication and operational visibility, it is not always a good fit for rapidly evolving applications because of the complexity it adds.

Common challenges include:

- Debugging issues across hundreds of microservices

- Deployment dependencies between services

- Network latency from remote service calls

- Increased resource utilisation

- Complex testing requirements

- Fragmented observability across services

Benefits of Service Mesh

Within a large-scale microservice architecture, a service mesh provides advanced traffic management, observability, and security controls.

Key benefits include:

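Improved reliability through circuit breakers, which stop sending requests to a failing service once a failure threshold is exceeded, so callers fail fast instead of waiting on an unhealthy dependency and the failure does not cascade.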
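The general circuit-breaker state machine (closed, open, half-open) can be sketched in a few lines of Python. Meshes such as Istio implement the equivalent inside the proxy through configuration (connection-pool limits and outlier detection), not through application code like this; the thresholds, the injectable clock, and the class names below are assumptions chosen for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected without being attempted."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open: failing fast")
            self.state = "half_open"  # timeout elapsed: let one trial request through
        try:
            result = fn()
        except Exception:
            self._record_failure()
            raise
        # A success closes the circuit and resets the failure count.
        self.state = "closed"
        self.failures = 0
        return result

    def _record_failure(self) -> None:
        self.failures += 1
        # A failed trial request, or too many failures, (re)opens the circuit.
        if self.state == "half_open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()


now = [0.0]  # fake clock so the example is deterministic
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])


def flaky() -> str:
    raise ConnectionError("upstream unavailable")


for _ in range(2):
    try:
        breaker.call(flaky)
    except ConnectionError:
        pass
print(breaker.state)  # open
```

While the circuit is open, further calls raise CircuitOpenError immediately; once the reset timeout passes, a single successful call closes the circuit again.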

Runtime monitoring through dashboards that provide insights into application health, response times, failures, and workload distribution. Tools such as Kiali and Grafana are commonly used.
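Dashboards like these typically chart telemetry that the sidecar proxies emit for every request (Istio, for example, exposes standard metrics for Prometheus, which Grafana can query). As a rough illustration of what such panels compute, the sketch below aggregates per-request records into request count, 5xx error rate, and 95th-percentile latency per service. The RequestRecord shape and the sample data are hypothetical, not a real telemetry format.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RequestRecord:
    service: str
    status: int        # HTTP status code
    latency_ms: float


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


def summarise(records: list[RequestRecord]) -> dict[str, dict[str, float]]:
    # Group records by the service that handled them.
    by_service: dict[str, list[RequestRecord]] = defaultdict(list)
    for r in records:
        by_service[r.service].append(r)
    summary = {}
    for service, recs in by_service.items():
        errors = sum(1 for r in recs if r.status >= 500)
        summary[service] = {
            "requests": len(recs),
            "error_rate": errors / len(recs),
            "p95_ms": percentile([r.latency_ms for r in recs], 95),
        }
    return summary


records = [
    RequestRecord("orders", 200, 12.0),
    RequestRecord("orders", 200, 15.0),
    RequestRecord("orders", 503, 250.0),
    RequestRecord("orders", 200, 18.0),
    RequestRecord("catalog", 200, 5.0),
]
summary = summarise(records)
print(summary["orders"])  # {'requests': 4, 'error_rate': 0.25, 'p95_ms': 250.0}
```

A single slow, failing request dominates the p95 for orders here, which is why dashboards usually show tail latency alongside averages.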

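Enhanced security through traffic encryption (typically mutual TLS), authentication, and authorisation enforced across all service communications. Because every request passes through a sidecar proxy, the mesh can be configured to reject any service-to-service traffic that is not explicitly allowed.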
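A deny-by-default check of the kind a mesh can enforce at each proxy is sketched below. In Istio this is configured declaratively (for example, PeerAuthentication for strict mutual TLS plus AuthorizationPolicy resources) rather than written as code; the Request and AllowRule types, the service names, and the path-prefix matching are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Request:
    source: Optional[str]  # caller identity from a verified mTLS certificate; None if unauthenticated
    destination: str
    path: str


@dataclass(frozen=True)
class AllowRule:
    source: str
    destination: str
    path_prefix: str = "/"


def is_allowed(req: Request, rules: list[AllowRule]) -> bool:
    # Authentication: reject callers without a verified identity (e.g. plaintext traffic).
    if req.source is None:
        return False
    # Authorisation, deny by default: allow only if some rule explicitly matches.
    return any(
        rule.source == req.source
        and rule.destination == req.destination
        and req.path.startswith(rule.path_prefix)
        for rule in rules
    )


rules = [AllowRule(source="orders", destination="payments", path_prefix="/charge")]
print(is_allowed(Request("orders", "payments", "/charge/42"), rules))   # True
print(is_allowed(Request("catalog", "payments", "/charge/42"), rules))  # False: no matching rule
print(is_allowed(Request(None, "payments", "/charge/42"), rules))       # False: unauthenticated
```

With an empty rule list every request is rejected, which is the deny-by-default behaviour the mesh can be configured to enforce.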

Popular Service Mesh Tools for Kubernetes

Several service mesh tools are available to support Kubernetes-based microservices:

- AWS App Mesh

- Istio

- Linkerd

- Envoy (a proxy rather than a complete mesh; it serves as the data plane for meshes such as Istio)

- Consul

- OpenShift Service Mesh

MuleSoft Anypoint Service Mesh

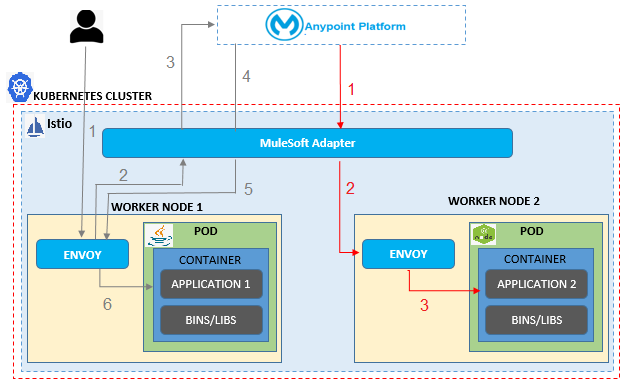

MuleSoft Anypoint Service Mesh functions as an API management layer for both MuleSoft and non-MuleSoft applications deployed in Kubernetes. It is implemented by installing the Mule Adapter within the Istio service mesh.

When external requests enter the Kubernetes cluster, traffic flows through the Envoy proxies, which route it to the Mule Adapter. The adapter validates each request against the applied policies before the request is forwarded to the target microservice. This approach allows organisations to apply Kubernetes-native security alongside Anypoint Platform policies.

For east-west (service-to-service) traffic, supported policies include rate limiting, SLA enforcement, JWT validation, and client ID enforcement. Payload-based transformations are better suited to a central API gateway than to the service mesh layer.
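The flow described above, in which policies are checked before traffic reaches the service, can be modelled as a simple policy chain. This is a hypothetical Python sketch, not the Mule Adapter's implementation or any Anypoint API: the class names, the 401/429 status codes, the registered client ID, and the fixed-window counting are all assumptions, and Anypoint's rate-limiting policy may count requests differently.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Protocol


@dataclass(frozen=True)
class Request:
    client_id: Optional[str]
    path: str


@dataclass(frozen=True)
class Rejection:
    status: int
    reason: str


class Policy(Protocol):
    def check(self, req: Request) -> Optional[Rejection]: ...


class ClientIdEnforcement:
    """Reject requests whose client ID is not in a (hypothetical) registry."""

    def __init__(self, registered_clients: set[str]) -> None:
        self.registered_clients = registered_clients

    def check(self, req: Request) -> Optional[Rejection]:
        if req.client_id not in self.registered_clients:
            return Rejection(401, "invalid client id")
        return None


class FixedWindowRateLimit:
    """Allow at most `limit` requests per client ID in each `window_s`-second window."""

    def __init__(self, limit: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self._windows: dict[Optional[str], tuple[float, int]] = {}

    def check(self, req: Request) -> Optional[Rejection]:
        now = self.clock()
        start, count = self._windows.get(req.client_id, (now, 0))
        if now - start >= self.window_s:
            start, count = now, 0  # window expired: start a new one
        if count >= self.limit:
            return Rejection(429, "rate limit exceeded")
        self._windows[req.client_id] = (start, count + 1)
        return None


def handle(req: Request, policies: list[Policy], upstream: Callable[[Request], int]) -> int:
    """Run policies in order; the first rejection wins, otherwise forward to the service."""
    for policy in policies:
        rejection = policy.check(req)
        if rejection is not None:
            return rejection.status
    return upstream(req)


now = [0.0]  # fake clock so the example is deterministic
policies: list[Policy] = [
    ClientIdEnforcement({"mobile-app"}),
    FixedWindowRateLimit(limit=2, window_s=60.0, clock=lambda: now[0]),
]


def orders_service(req: Request) -> int:
    return 200  # stand-in for the real microservice


print([handle(Request("mobile-app", "/orders"), policies, orders_service) for _ in range(3)])
# [200, 200, 429]
print(handle(Request("unknown-app", "/orders"), policies, orders_service))  # 401
```

Ordering matters in this design: client ID enforcement runs first, so unknown callers are rejected before they consume any rate-limit quota.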

Key aspects of Anypoint Service Mesh include:

- Centralised management through Anypoint Exchange, API Designer, and API Manager

- Discovery of non-Mule APIs running inside Kubernetes

Conclusion

As cloud adoption accelerates, more organisations are embracing microservice architecture to build scalable, single-responsibility services. This shift has increased reliance on containerisation tools such as Docker and on orchestration platforms such as Kubernetes.

However, the growing number of microservices introduces networking, security, and observability challenges. Service mesh technology addresses these concerns by enabling secure communication, traffic management, and detailed monitoring.

For enterprises operating large microservice ecosystems, Anypoint Service Mesh extends these capabilities by bringing enterprise-grade API governance to both MuleSoft and non-MuleSoft services.